CemoBAM: Advancing Multimodal Emotion Recognition through Heterogeneous Graph Networks and Cross-Modal Attention Mechanisms

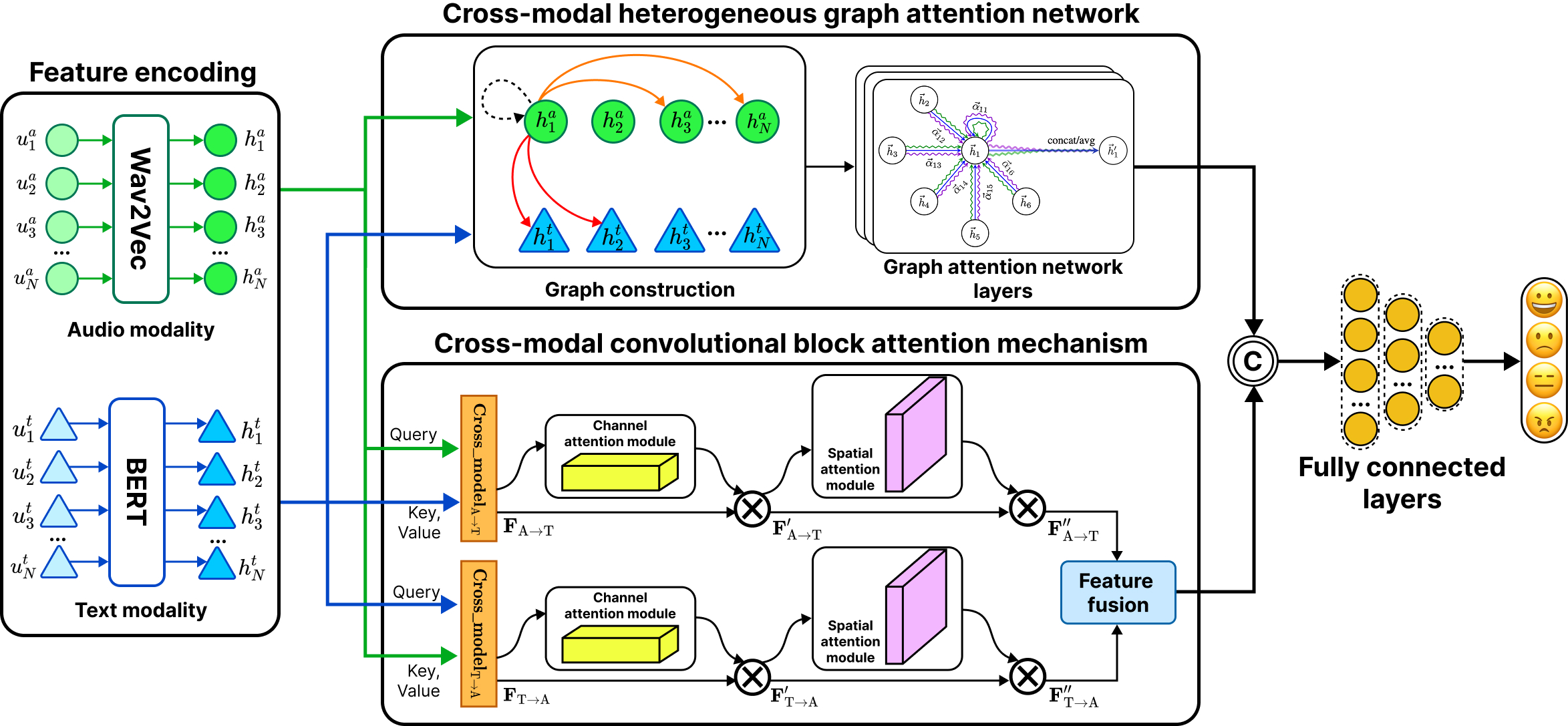

A dual-stream architecture that integrates a Cross-modal Heterogeneous Graph Attention Network (CH-GAT) with a Cross-modal CBAM fusion block.

Nhut Minh Nguyen · Thu Thuy Le · Thanh Trung Nguyen · Duc Tai Phan · Anh Khoa Tran · Duc Ngoc Minh Dang

Abstract

Multimodal Speech Emotion Recognition (SER) offers significant advantages over unimodal approaches by integrating diverse information streams such as audio and text. However, effectively fusing these heterogeneous modalities remains challenging. We propose CemoBAM, a novel dual-stream architecture that integrates a Cross-modal Heterogeneous Graph Attention Network (CH-GAT) with a Cross-modal Convolutional Block Attention Mechanism (xCBAM). In the CemoBAM architecture, CH-GAT constructs a heterogeneous graph that models intra- and inter-modal relationships. It employs multi-head attention to capture fine-grained dependencies between audio and text feature embeddings. xCBAM refines features through a cross-modal transformer with a modified 1D-CBAM, employing bidirectional cross-attention and channel-spatial attention to emphasize emotionally salient features. CemoBAM surpasses previous state-of-the-art (SOTA) methods by 0.32% on the IEMOCAP dataset and by 3.25% on the ESD dataset. Comprehensive ablation studies validate the impact of the Top-K graph construction parameters and fusion strategies, as well as the complementary contributions of both modules. The results highlight CemoBAM's robustness and its potential for advancing multimodal SER applications.
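The Top-K graph construction can be made concrete with a minimal, dependency-free Python sketch. It is not the CemoBAM implementation and rests on the following assumptions:

- each utterance contributes one audio node and one text node;
- both embeddings have already been projected to a shared dimension, so cross-modal cosine similarity is defined;
- each node is linked to its K most similar nodes.

The helper names and edge-type keys such as "audio->text" are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity; 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0


def top_k_edges(src: List[Vector], dst: List[Vector], k: int,
                same_set: bool) -> List[Tuple[int, int]]:
    """Link every src node to its k most similar dst nodes (no self-loops)."""
    edges = []
    for i, u in enumerate(src):
        scored = [(cosine(u, v), j) for j, v in enumerate(dst)
                  if not (same_set and i == j)]
        scored.sort(key=lambda s: (-s[0], s[1]))  # best first, ties by index
        edges.extend((i, j) for _, j in scored[:k])
    return edges


def build_hetero_graph(audio: List[Vector], text: List[Vector],
                       k: int) -> Dict[str, List[Tuple[int, int]]]:
    """Hypothetical typed (src_idx, dst_idx) edge lists for a two-modality graph."""
    return {
        "audio->audio": top_k_edges(audio, audio, k, same_set=True),  # intra-modal
        "text->text": top_k_edges(text, text, k, same_set=True),      # intra-modal
        "audio->text": top_k_edges(audio, text, k, same_set=False),   # inter-modal
        "text->audio": top_k_edges(text, audio, k, same_set=False),   # inter-modal
    }


# Toy example: 3 utterances, 2-dimensional embeddings per modality.
audio = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
text = [[1.0, 0.1], [0.0, 1.0], [0.1, 0.9]]
graph = build_hetero_graph(audio, text, k=1)
print(graph["audio->text"])  # [(0, 0), (1, 0), (2, 1)]
```

Increasing `k` densifies the graph, which is the knob a Top-K sweep varies. Attention layers would then aggregate messages only along these typed edges.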

Figure 1. Overview of the CemoBAM pipeline illustrating the CH-GAT and xCBAM fusion process for multimodal emotion recognition.
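The bidirectional cross-attention in the xCBAM stream can be pictured as plain scaled dot-product attention in two directions. Audio queries attend to text keys and values, and text queries attend to audio keys and values. This sketch makes several simplifying assumptions:

- identity query/key/value projections;
- a single head;
- a feature dimension shared by both modalities;
- a residual connection.

A real cross-modal transformer would add learned projections, multiple heads and normalization. All function names are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]  # rows = frames or tokens, columns = features


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention: each query row attends over all key rows."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, values))
                    for c in range(len(values[0]))])
    return out


def bidirectional_cross_attention(audio: Matrix, text: Matrix) -> Tuple[Matrix, Matrix]:
    """Audio attends to text and text attends to audio, each with a residual."""
    audio_ctx = cross_attention(audio, text, text)  # audio queries, text keys/values
    text_ctx = cross_attention(text, audio, audio)  # text queries, audio keys/values
    audio_out = [[x + c for x, c in zip(row, ctx)] for row, ctx in zip(audio, audio_ctx)]
    text_out = [[x + c for x, c in zip(row, ctx)] for row, ctx in zip(text, text_ctx)]
    return audio_out, text_out


# Toy example: 3 audio frames and 2 text tokens in a shared 2-d feature space.
audio_out, text_out = bidirectional_cross_attention(
    [[0.5, -1.0], [2.0, 0.0], [1.0, 1.0]],
    [[1.0, 2.0], [0.0, 1.0]],
)
```

Each output row mixes the other modality's values according to query-key similarity, so every audio frame is enriched with text context and vice versa.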

Summary

CH-GAT captures inter- and intra-modal relationships while xCBAM refines channel-spatial signals, enabling robust fusion on noisy speech datasets.
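Channel-spatial refinement in the CBAM style works in two steps:

1. Channel attention comes from a shared MLP applied to average- and max-pooled descriptors.
2. Spatial attention comes from a convolution over channel-wise average and max maps.

The sketch below adapts standard CBAM to 1D sequences shaped (channels, time steps). It is not the authors' modified 1D-CBAM. The weight arguments are hypothetical placeholders for learned parameters.

```python
import math
from typing import List

Matrix = List[List[float]]  # shape (channels, time steps)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def matvec(w: Matrix, v: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in w]


def channel_attention(x: Matrix, w1: Matrix, w2: Matrix) -> Matrix:
    """Scale each channel by sigmoid(MLP(avg_pool) + MLP(max_pool)) over time."""
    avg = [sum(ch) / len(ch) for ch in x]
    mx = [max(ch) for ch in x]

    def mlp(v: List[float]) -> List[float]:
        hidden = [max(0.0, h) for h in matvec(w1, v)]  # ReLU bottleneck
        return matvec(w2, hidden)                      # project back to channel count

    scale = [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]
    return [[s * val for val in ch] for s, ch in zip(scale, x)]


def spatial_attention(x: Matrix, kernel: Matrix, bias: float = 0.0) -> Matrix:
    """Scale each time step via a 1D conv over channel-wise avg and max maps."""
    channels, steps = len(x), len(x[0])
    pad = len(kernel[0]) // 2  # keeps the output length for odd kernel sizes
    avg = [sum(x[c][t] for c in range(channels)) / channels for t in range(steps)]
    mx = [max(x[c][t] for c in range(channels)) for t in range(steps)]
    scale = []
    for t in range(steps):
        z = bias
        for feature_map, weights in zip((avg, mx), kernel):  # 2 input maps
            for j, w in enumerate(weights):
                pos = t + j - pad
                if 0 <= pos < steps:  # zero padding outside the sequence
                    z += w * feature_map[pos]
        scale.append(sigmoid(z))
    return [[row[t] * scale[t] for t in range(steps)] for row in x]


def cbam_1d(x: Matrix, w1: Matrix, w2: Matrix, kernel: Matrix,
            bias: float = 0.0) -> Matrix:
    """Channel attention first, then temporal ('spatial') attention, as in CBAM."""
    return spatial_attention(channel_attention(x, w1, w2), kernel, bias)


# Toy example: 2 channels x 4 time steps, 1 hidden unit, kernel size 3.
features = [[0.1, 0.8, 0.3, 0.0], [0.5, 0.2, 0.9, 0.4]]
refined = cbam_1d(features, w1=[[1.0, -1.0]], w2=[[0.5], [-0.5]],
                  kernel=[[0.2, 0.6, 0.2], [0.1, 0.3, 0.1]])
```

Because both attention maps pass through a sigmoid, they can only rescale features. Salient channels and time steps are kept close to their original magnitude while others are damped.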

Why it matters

By unifying graph reasoning with attention-based calibration, CemoBAM improves accuracy over prior multimodal SER systems by 0.32% on IEMOCAP and 3.25% on ESD.

Key ingredients

- Cross-modal Heterogeneous Graph Attention (CH-GAT)

- Cross-modal CBAM (xCBAM) refinement

- Gated dual-stream fusion with residual safeguards
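One common way to realize gated dual-stream fusion with a residual path is sketched below. The following are all assumptions for illustration, not the published CemoBAM fusion:

- the gate formula;
- averaging the two streams as the residual;
- every name in the block.

The residual term keeps both streams contributing even if the gate saturates toward one of them.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def gated_fusion(h_graph: List[float], h_attn: List[float],
                 w: List[List[float]], b: List[float]) -> List[float]:
    """Hypothetical gated fusion of two stream embeddings of dimension d.

    g     = sigmoid(W [h_graph; h_attn] + b)      W has shape (d, 2d)
    fused = g * h_graph + (1 - g) * h_attn        element-wise
    out   = fused + (h_graph + h_attn) / 2        assumed residual path
    """
    concat = h_graph + h_attn  # list concatenation -> length 2d
    gate = [sigmoid(sum(wi * xi for wi, xi in zip(row, concat)) + bi)
            for row, bi in zip(w, b)]
    fused = [g * a + (1.0 - g) * c for g, a, c in zip(gate, h_graph, h_attn)]
    return [f + 0.5 * (a + c) for f, a, c in zip(fused, h_graph, h_attn)]


# Toy example: 3-d embeddings from the graph stream and the attention stream.
h_gat = [0.2, -0.4, 1.0]
h_xcbam = [0.6, 0.1, -0.3]
w = [[0.1] * 6 for _ in range(3)]  # hypothetical gate weights, shape (d, 2d)
fused = gated_fusion(h_gat, h_xcbam, w, b=[0.0, 0.0, 0.0])
```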

Get Started Locally

Setup

git clone https://github.com/nhut-ngnn/CemoBAM.git
cd CemoBAM
conda create --name cemobam python=3.8
conda activate cemobam
pip install -r requirements.txt

Run Experiments

# Grid-search top-k graph density
bash selected_topK.sh

# Default training run
bash run_training.sh

# Evaluate saved checkpoints
bash run_eval.sh

Datasets & Features

- IEMOCAP - four emotion classes, with audio recordings paired with aligned text transcripts.

- ESD - five emotion classes, recorded in English and Mandarin by multiple speakers.

Pre-computed .pkl feature files for both modalities are available to speed up experimentation. To obtain the processed features, request access via the GitHub issues board.

Evaluation Assets

- Attention visualisations for per-modality saliency exploration.

- Confusion matrices logged for every experiment run.

- Structured ablation scripts covering top-k, fusion strategy, and regularisation factors.
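Confusion matrices and accuracy figures like those logged per run can be derived from true and predicted labels as follows. The sketch reports two metrics widely used in SER:

- weighted accuracy (WA): the overall fraction of correct predictions;
- unweighted accuracy (UA): the mean per-class recall.

Which of these the CemoBAM logs report is not stated here, so treat the function names and the metric pairing as assumptions.

```python
from typing import List, Sequence, Tuple


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     num_classes: int) -> List[List[int]]:
    """Rows index the true class, columns the predicted class."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def accuracies(cm: List[List[int]]) -> Tuple[float, float]:
    """Return (weighted accuracy, unweighted accuracy).

    WA: overall fraction of correct predictions.
    UA: mean recall over classes that appear in y_true; less sensitive to imbalance.
    """
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    recalls = [cm[i][i] / sum(row) for i, row in enumerate(cm) if sum(row) > 0]
    return correct / total, sum(recalls) / len(recalls)


# Toy example with four classes (indices 0..3), as in the IEMOCAP setup.
y_true = [0, 0, 0, 1, 1, 2, 3, 3]
y_pred = [0, 0, 1, 1, 1, 2, 3, 0]
cm = confusion_matrix(y_true, y_pred, num_classes=4)
wa, ua = accuracies(cm)  # wa == 0.75
```

WA and UA diverge when classes are imbalanced. Here one error in the small class 3 costs UA more than it costs WA.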

Cite This Work

If CemoBAM helps your research, please cite the APNOMS 2025 publication.

@inproceedings{nguyen2025CemoBAM,
  title = {CemoBAM: Advancing Multimodal Emotion Recognition through Heterogeneous Graph Networks and Cross-Modal Attention Mechanisms},
  author = {Nguyen, Nhut Minh and Le, Thu Thuy and Nguyen, Thanh Trung and Phan, Duc Tai and Tran, Anh Khoa and Dang, Duc Ngoc Minh},
  booktitle = {2025 Asia-Pacific Network Operations and Management Symposium (APNOMS)},
  address = {Kaohsiung, Taiwan},
  year = {2025},
  month = {September},
  doi = {10.23919/apnoms67058.2025.11181320}
}

Collaborators

- Nhut Minh Nguyen, FPT University, Vietnam

- Thu Thuy Le, FPT University, Vietnam

- Thanh Trung Nguyen, FPT University, Vietnam

- Duc Tai Phan, FPT University, Vietnam

- Anh Khoa Tran, Modeling Evolutionary Algorithms Simulation & AI, Vietnam

- Duc Ngoc Minh Dang, FPT University, Ho Chi Minh City, Vietnam

Reach out via minhnhut.ngnn@gmail.com for collaborations, demo requests, or dataset access.

APNOMS 2025 · Kaohsiung, Taiwan

Presented at the 25th Asia-Pacific Network Operations and Management Symposium.

CemoBAM is part of an ongoing effort to advance emotionally aware multimodal AI systems.